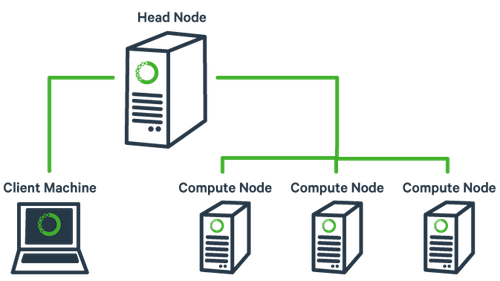

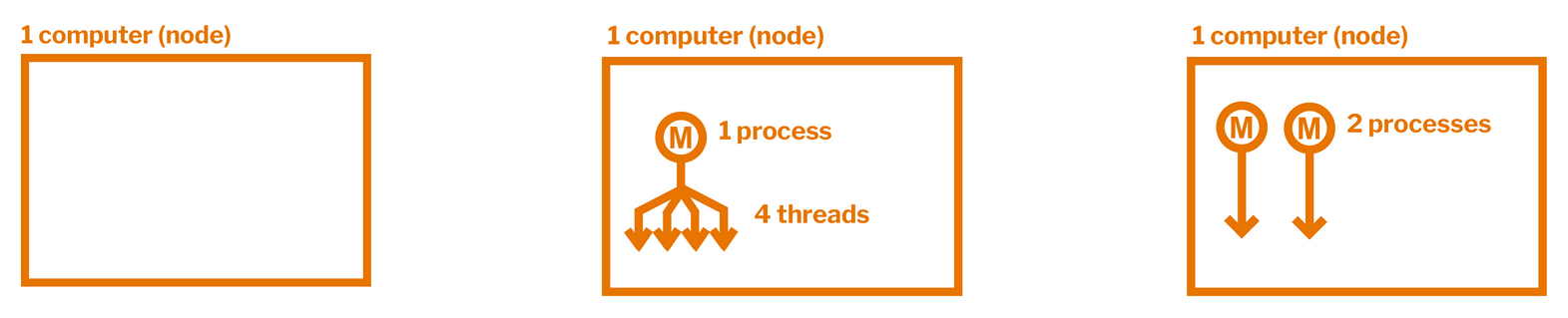

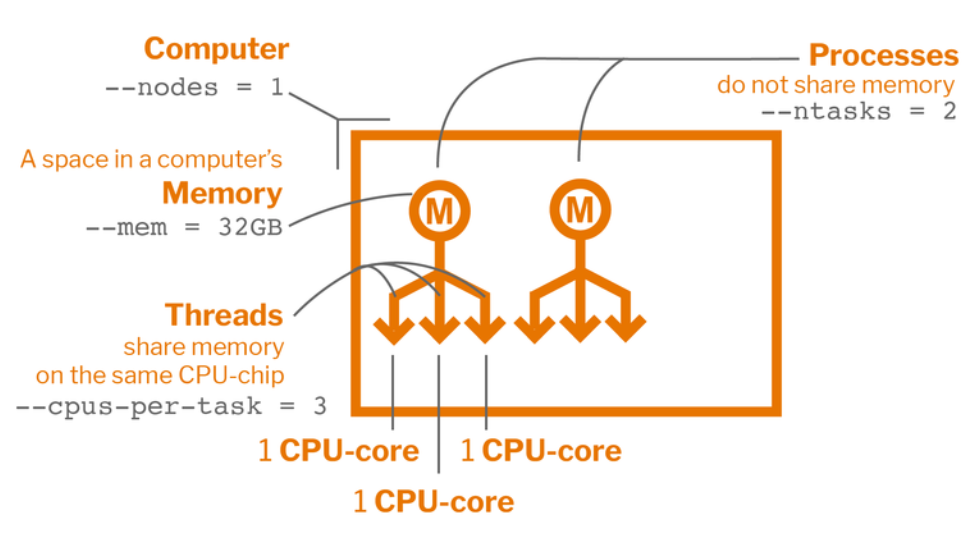

class: center, middle, inverse, title-slide

.title[
# Remote servers and research computing
]

.subtitle[
## Command line interface, ssh, and parallelization
]

.author[
### Guillaume Falmagne
]

.date[
### <br> Nov. 11th, 2023
]

---

# Why Use a Remote Server?

.pull-left[

#### 1. Computational Resources
- Remote servers often have powerful and abundant hardware, making them ideal for resource-intensive tasks (data analysis, machine learning, scientific simulations...)

#### 2. Data Storage and Backup
- Remote servers provide centralized storage and backup solutions, ensuring data safety and accessibility from anywhere.

#### 3. Collaboration
- Remote servers enable collaboration among team members, allowing multiple users to access and work on shared projects simultaneously.

]

.pull-right[

#### 4. Access From Anywhere
- You can access your remote server from anywhere with an internet connection, making it convenient for remote work and travel.

#### 5. Access To Anywhere (remote)
- A remote server allows you to perform tasks on a machine located elsewhere, which can be useful for accessing specific software or environments.

]

---

# Why Use a Remote Server?

.pull-left[

#### 6. Scalability
- Remote servers are scalable, so you can easily adjust resources based on the computation and storage needs of each project.

#### 7. Security
- Remote servers can be configured with strict security measures to protect sensitive data, reducing the risk of data breaches.

]

.pull-right[

#### 8. Server Administration
- If you need to manage servers or perform administrative tasks, remote servers provide direct access to system settings and configurations.

#### 9. Disaster Recovery
- Remote servers often have robust disaster recovery plans, ensuring minimal downtime and data loss in case of hardware failures or other issues.

]

---

# How to run my (intensive) code faster?

.left-column60[

- **Parallelization** is the process of running **multiple tasks simultaneously on multiple CPUs** (or GPUs) to speed up computation.
- Standard scientific computing ("runs big loops") runs better on CPUs. GPUs are well suited to machine learning tasks or to tasks that are extremely parallel.

Procedure:

1. Write code that can be **split into independent tasks** (either within the code, or by preparing to run the code with multiple parameter values).
2. Communicate with a **job scheduler** (e.g., Slurm) to run the code on a cluster of computers. This requires:
    - **remote server** access (`ssh`)
    - `bash` (terminal) scripting.
3. **Monitor** the job and **retrieve** the files/results.

]

.right-column60[

Cluster architecture:

]

---

# Multiple cores are not a magic code accelerator!

- Run `top` on your (Mac/Linux) computer while running an intensive task. You will see that a given task usually uses only one core at a time!
- Clear intro to parallelization by [Princeton Research Computing](https://researchcomputing.princeton.edu/support/knowledge-base/parallel-code). Main concepts:
    - You need to write code that can be conceptually split into independent tasks.
    - A **job** is a collection of **tasks** that can be run in parallel.
    - Tasks can run as independent **processes**, not sharing a common memory ("job arrays"). This is the easiest way to parallelize code (preparing tasks that can explicitly be run independently).
    - Tasks can be run as **threads**, where all threads share a common memory. Usually **1 thread** is used **per CPU core** of the same **node** (a.k.a. "computer"). OpenMP (for multi-threading) and MPI (for multi-node) are common libraries for this (quite technical...)

.pull-left[

]

.pull-right[

]

---

# The Terminal

.pull-left[

### What is the Terminal?

- The terminal, also known as the command line interface (CLI), is a text-based interface for interacting with a computer's operating system.
- No graphical user interface (GUI), no mouse or windows, just text commands.
- MacOS terminal emulator: [https://iterm2.com/](https://iterm2.com/)
- Windows terminal emulator:
    - [wsl](https://learn.microsoft.com/en-us/windows/wsl/install): Linux interface on Windows
    - [https://learn.microsoft.com/en-us/powershell/](https://learn.microsoft.com/en-us/powershell/): just a terminal
    - [https://www.putty.org/](https://www.putty.org/): For using remote servers

]

.pull-right[

]

---

# Understanding Shells

- In the context of the terminal, a **shell** is a **command-line interface** that **interprets and executes** commands.
- Shells provide a way to interact with the operating system and run various programs.

#### Bash (Bourne-Again Shell)
- Bash is one of the most popular and widely used shells.
- It is the **default shell** on most Linux distributions (macOS has used Zsh as its default since Catalina).
- Known for its scripting capabilities, Bash is versatile and user-friendly.

#### Zsh (Z Shell)
- Zsh is a Bourne-style shell, **largely compatible with Bash**, with additional features.
- It offers powerful auto-completion, customization options, and theme support.
- Zsh is often favored by power users and developers.

#### Fish (Friendly Interactive Shell)
- [Fish](https://fishshell.com/) is designed to be **user-friendly** and easy to use → good for beginners.
- Built-in syntax highlighting + interactive auto-suggestions.
- Simplicity and discoverability.

#### Change shells using the command `chsh -s /bin/bash`

---

# Improving shells

Themes, auto-completion, plugins, and much more

### For bash:
- [github.com/ohmybash/oh-my-bash](https://github.com/ohmybash/oh-my-bash)

### For zsh:
- [github.com/ohmyzsh/ohmyzsh](https://github.com/ohmyzsh/ohmyzsh)

### For fish:
- [github.com/oh-my-fish/oh-my-fish](https://github.com/oh-my-fish/oh-my-fish)

---

# Configuration Files for Shells

- Shells can be customized by using configuration files.
- They usually have names starting with `.` (which hides them from a plain `ls`) and ending in `rc`, for 'run commands'.
- These files allow you to define environment variables, aliases, and customize your shell's behavior.

### Bash Configuration: `.bashrc`

- In **Bash**, the `.bashrc` file is used for configuring user-specific settings.
- It is executed **whenever you start an interactive Bash session**.
- Common configurations include setting **aliases**, modifying the **PATH**, defining **shell variables**, and customizing the shell appearance. Consider Oh My Bash for advanced configurations.

#### Example `.bashrc`:

```bash
# Set an alias
alias ll='ls -alh'

# Add a directory to the PATH
export PATH="$PATH:/usr/local/bin"
```

---

# Zsh Configuration: `.zshrc`

Zsh uses the `.zshrc` file for user-specific configurations.

- It provides a wide range of customization options, including theme selection, plugin management, and defining functions.
- Oh My Zsh is a convenient way to manage Zsh configurations.

Example `.zshrc`:

```zsh
# Set Zsh theme
ZSH_THEME="agnoster"

# Load Oh-My-Zsh plugins
plugins=(git docker python conda)
```

---

# Basic Terminal Operations: File System

#### Creating Files and Directories
- To create a file: `touch filename.txt`
- To create a directory: `mkdir directory_name`

#### Deleting, Moving, and Copying Files
- Deleting a file: `rm filename.txt`
- Moving a file: `mv sourcefile.txt destination/`
- Copying a file: `cp sourcefile.txt destination/` (use `-r` for directories)

#### Renaming Files
- To rename a file: `mv oldfilename.txt newfilename.txt`

#### Getting help
- To get the documentation of a command: `man ls`

---

# Basic Terminal Operations: Navigating

#### Listing Files in a Directory
- To list files in the current directory: `ls` (`-l` for long format, `-a` to include hidden files)
- To list files in a specific directory: `ls /path/to/directory`

#### Navigating Directories
- Getting current directory: `pwd`
- Changing to a directory: `cd directory_name`
- Going up one directory: `cd ..`
- Going up two directories: `cd ../..`
- Going to the home directory: `cd ~`
- Going back to the previous directory: `cd -`

#### Finding Files
- Searching for files: `find /path/to/search -name "filename"`
- Searching for words in the file content: `grep -n "word" file.txt` (use `grep -rn "word" /path/to/search` to search a directory recursively)

---

# Interacting with files

#### Getting the contents of a file
- Show the contents of a file: `cat file.txt`
- Page through the content of a large file: `less long_file.txt`

#### Creating and modifying files
- Directing output to a file with the redirect operator `>` (overwrites the file if it exists)
    - `ls -la > list_of_files.txt`
- Print the argument: `echo "This is a test"`
    - Echo to new file: `echo "This is a test" > test_1.txt`
- Append to an existing file with `>>`
    - Echo to existing file: `echo "This is a second test" >> test_1.txt`

---

# The original pipe

- The pipe operator (`|`) is a powerful tool in Unix-like terminal shells.
- It allows you to chain multiple commands together, passing the output of one command as input to the next.
- This enables efficient and flexible data processing.

#### Example: Sorting, Uniqueness, and Word Count

- Let's explore the pipe operator with a practical example.
- We'll use the `sort`, `uniq`, and `wc` (word count) commands to demonstrate its functionality.

```bash
ls | sort | uniq | wc -l
```

This command performs the following operations:

1. Lists the files in the current directory.
2. Sorts the lines in alphabetical order.
3. Removes duplicate lines.
4. Counts the number of unique lines.

---

# Terminal based text editors

In addition to command-line tools, text editors are essential for working in the terminal. Most rely on many **keyboard shortcuts** that take some time to learn...

- Vim
    - A highly configurable and efficient text editor.
    - [vim.org](https://www.vim.org/), [neovim](https://neovim.io/)
- Emacs
    - A powerful and extensible text editor.
    - [GNU Emacs](https://www.gnu.org/software/emacs/)
- Nano
    - A simple and user-friendly text editor.
    - [Nano](https://www.nano-editor.org/)
- Micro
    - A modern and intuitive terminal-based text editor.
    - [Micro](https://micro-editor.github.io/)
- ed
    - A line-oriented text editor.
    - [https://www.gnu.org/software/ed/](https://www.gnu.org/software/ed/)

---

# Using SSH and Remote Servers

#### Logging into a Remote Server

The **secure shell protocol** (ssh) allows us to connect to the remote server.
Authentication can use a password or the same key pair we used for GitHub.

- To log in using SSH: `ssh username@remote_server_ip` (same authentication as for GitHub)
- Setting up key-pair authentication: https://researchcomputing.princeton.edu/support/knowledge-base/connect-ssh

#### Copying and Transferring Files
- Copying a file to a remote server: `scp localfile.txt username@remote_server_ip:/path/to/destination/`

#### Remote SSH in VS Code
- Take full advantage of the usual VS Code UI, but in a remote folder: [https://code.visualstudio.com/docs/remote/ssh](https://code.visualstudio.com/docs/remote/ssh)

---

# Job Scheduling Servers

#### What is a Job Scheduling Server?
- A job scheduling server (e.g., Slurm) manages and schedules computing jobs on a cluster of computers.
- https://researchcomputing.princeton.edu/support/knowledge-base/slurm

#### Slurm Script

```bash
#!/bin/bash
#SBATCH --job-name=slurm-test    # create a short name for your job
#SBATCH --nodes=1                # node count
#SBATCH --ntasks=1               # total number of tasks across all nodes
#SBATCH --cpus-per-task=1        # cpu-cores per task (>1 if multi-threaded tasks)
#SBATCH --mem-per-cpu=4G         # memory per cpu-core (4G is default)
#SBATCH --time=00:01:00          # total run time limit (HH:MM:SS)
#SBATCH --mail-type=begin        # send email when job begins
#SBATCH --mail-type=end          # send email when job ends
#SBATCH --mail-user=<YourNetID>@princeton.edu

module load R
Rscript r_program.R
```

---

# Basic Slurm Commands

- Submitting a job: `sbatch your_script.sh`
- Checking job status: `squeue -u your_username`
- Canceling a job: `scancel job_id`

Usage warning:

- It is important **not to over-use resources** (cores, memory, time) on the cluster!
- Typically, your future jobs will wait longer in the queue if you have been using excessive resources or running inefficient (not parallel enough) code.

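---

# Sketch: a Slurm job array

The "independent processes" idea from the parallelization slides can be sketched as a Slurm job array. This is a minimal sketch, not taken from the Princeton documentation: Slurm starts one independent task per index in `--array` and passes that index to the script as `SLURM_ARRAY_TASK_ID`. The job name, the values in `params`, and the idea that `r_program.R` reads a parameter from the command line are assumptions made for illustration. Outside Slurm the index falls back to 0, so the script can be dry-run locally.

```bash
#!/bin/bash
#SBATCH --job-name=array-test    # hypothetical job name
#SBATCH --array=0-4              # five independent tasks, indices 0 to 4
#SBATCH --nodes=1                # node count (per array task)
#SBATCH --ntasks=1               # one task per array element
#SBATCH --cpus-per-task=1        # cpu-cores per task
#SBATCH --mem-per-cpu=4G         # memory per cpu-core
#SBATCH --time=00:01:00          # run time limit per array task (HH:MM:SS)

# Slurm sets SLURM_ARRAY_TASK_ID inside each array task.
# Fall back to 0 so the script can also be dry-run outside Slurm.
task_id="${SLURM_ARRAY_TASK_ID:-0}"

# Hypothetical parameter values, one per array index
params=(0.1 0.2 0.5 1.0 2.0)
param="${params[$task_id]}"

echo "Task ${task_id} uses parameter ${param}"

# On the cluster, uncomment to run the actual program
# (assumes r_program.R reads its parameter from the command line):
# module load R
# Rscript r_program.R "${param}"
```

Submit it with `sbatch` like any other script. Each array task requests its own (small) set of resources, which keeps individual requests modest while still running the parameter values in parallel.
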
---

# Princeton Research Computing

- 125k cores in [Princeton Research Computing](https://researchcomputing.princeton.edu/) facilities
- [Multiple systems](https://researchcomputing.princeton.edu/systems/systems-overview) with different use cases (most use **Slurm** for scheduling):
    - **Nobel** or **Adroit**: low-key, for testing parallel programs and for classes
    - **Della** or **Tiger**: large general-purpose clusters/grids for research. Usually a simple application from your PI is needed to get access.
    - **Stellar**: powerful cluster dedicated mostly to astrophysics, chemical and biological engineering, and climate science.
- Also has long-term (heavy) [storage](https://researchcomputing.princeton.edu/support/knowledge-base/data-storage) in `/projects` and `/tigress`